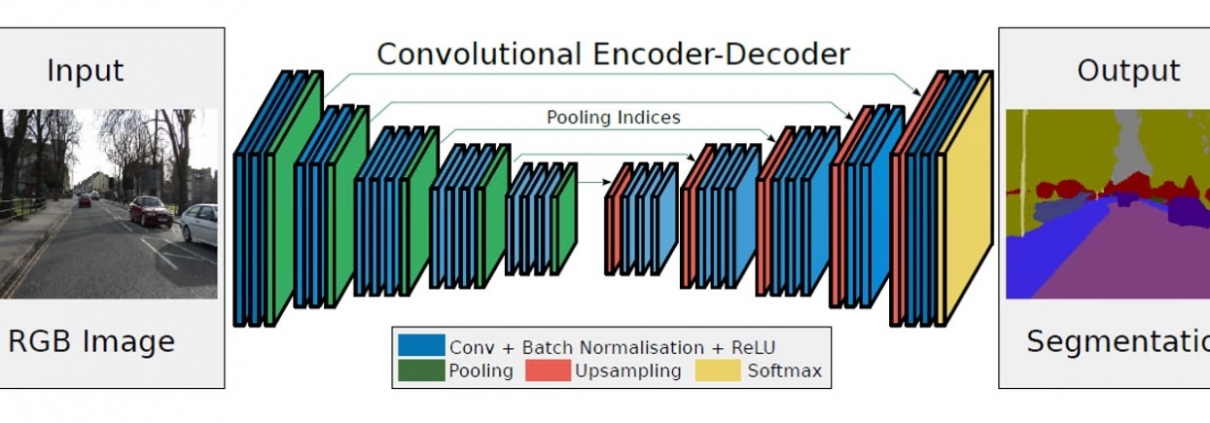

We also tested different optimization algorithms, regularization methods, and different learning settings for training the model. Of course, the ideal configuration of the model also depends on many other parameters. Problems arose with the TensorRT tool, which is used to optimize models and thereby reduce inference time. Nevertheless, we were able to build models that meet the real-time requirement, depending on the frame rate of the camera. In our case, the U-Net and ENet models performed lane segmentation on the Nvidia Jetson TX2 platform at between 14 fps and 18 fps. In the future, we intend to optimize these models further to achieve shorter inference times and make them easier to use. This is also a very active area of research, since the goal is to deploy deep learning models on less powerful devices such as mobile phones or even microcontrollers.

It is also worth noting that whether a model can operate in real time depends not only on the model's inference time but also on the processing before and after inference. For example, in our case the input RGB image had to be downscaled to 256×256. If the segmentation results must then be converted back to the original resolution after inference, these additional operations also take time. Therefore, it is often necessary to find a compromise between the resolution of the camera image and the input resolution of the model itself.
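The point that real-time operation depends on the whole pipeline, not only on the network, can be illustrated with a minimal Python sketch. It assumes single-channel images stored as nested lists, uses nearest-neighbour resizing for both the downscaling to the 256×256 model input and the upscaling of the mask back to camera resolution (a production pipeline would more likely use an optimized library and bilinear interpolation for the RGB input), and replaces U-Net/ENet with a hypothetical `dummy_segmenter`. All function names here are illustrative assumptions, not part of our implementation.

```python
import time
from typing import Callable, List, Tuple

# Assumption: a single-channel image (or label mask) stored as rows of ints.
Image = List[List[int]]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize; for label masks it never creates new class values."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def dummy_segmenter(img: Image) -> Image:
    """Hypothetical stand-in for U-Net/ENet: labels bright pixels as lane (1)."""
    return [[1 if p > 128 else 0 for p in row] for row in img]


def timed_pipeline(
    frame: Image,
    model: Callable[[Image], Image],
    in_size: int = 256,
) -> Tuple[Image, Tuple[float, float, float]]:
    """Run pre-processing, inference and post-processing, timing each in ms."""
    t0 = time.perf_counter()
    x = resize_nearest(frame, in_size, in_size)  # downscale to model input size
    t1 = time.perf_counter()
    mask = model(x)  # inference at in_size x in_size
    t2 = time.perf_counter()
    out = resize_nearest(mask, len(frame), len(frame[0]))  # back to camera size
    t3 = time.perf_counter()
    times_ms = ((t1 - t0) * 1e3, (t2 - t1) * 1e3, (t3 - t2) * 1e3)
    return out, times_ms


def frame_budget_ms(camera_fps: float) -> float:
    """Time available per frame if every camera frame is to be processed."""
    return 1000.0 / camera_fps


def meets_realtime(pre_ms: float, infer_ms: float, post_ms: float,
                   camera_fps: float) -> bool:
    """True if the whole pipeline, not just inference, fits into one frame period."""
    return pre_ms + infer_ms + post_ms <= frame_budget_ms(camera_fps)


if __name__ == "__main__":
    # Synthetic 480x640 gradient frame (assumed camera resolution).
    frame = [[(x + y) % 256 for x in range(640)] for y in range(480)]
    mask, (pre, inf, post) = timed_pipeline(frame, dummy_segmenter)
    print(f"pre {pre:.1f} ms, inference {inf:.1f} ms, post {post:.1f} ms")
    print("real-time at 30 fps:", meets_realtime(pre, inf, post, camera_fps=30))
```

As a rough budget check: a camera running at 30 fps leaves 1000/30 ≈ 33.3 ms per frame, whereas a throughput of 14 to 18 fps corresponds to roughly 71.4 to 55.6 ms per frame, so such a pipeline would have to skip frames or run with a slower camera. Nearest-neighbour interpolation is chosen for the mask because it keeps the output restricted to the original class labels.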