Research

A need for nonverbal communication

Embodied agents can be better communicators than disembodied voices, because their gestures and actions can reinforce and clarify what is said and help manage turn-taking more efficiently. For this multimodal communication to be effective, we need language generators that support multimodal interaction: speech must be coordinated with other communicative modalities such as gestures, eye gaze, and facial expressions. Besides enabling more effective communication, embodiment helps a dialogue system take on a social role, because users treat an embodied agent more like another person. This is also why users expect virtual agents to communicate nonverbally. Human-human interaction involves several types of nonverbal communication, such as gesture, gaze, facial expression, and posture. Gesture is one of the most evident of these. Gestures are motions and poses performed primarily with the arms and hands while speaking. Although nonverbal communication has a positive effect on users, its timing and its match with speech are very important. Gestures that do not match the speech well reduce how well users understand embodied agents and humanoid robots.

Behavior Generation

Gesture Theory

McNeill classifies gestures into four distinct categories:

1. Iconics – Iconic gestures represent some aspect of the scene, for example physical shapes or the direction of movement.

2. Metaphorics – Metaphoric gestures use motion to represent an abstract concept.

3. Deictics – Deictic gestures are pointing motions used to indicate an object or a direction.

4. Beats – Beat gestures are repetitive, rhythmic hand motions used for emphasis. They are closely tied to the prosody of speech.

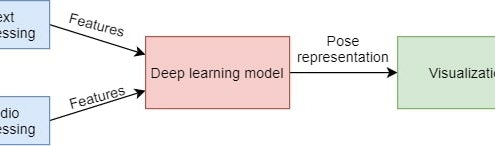

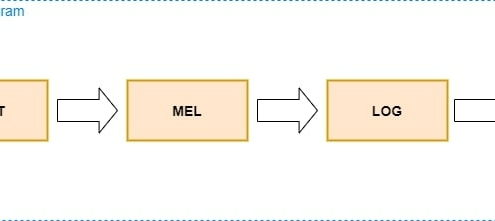

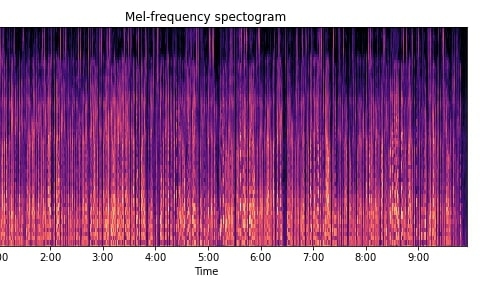

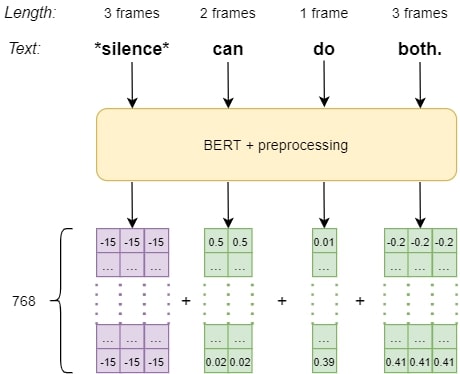

Features for training deep learning models

For deep learning models to use input modalities such as audio and text, we first need to extract useful features from the raw data. When several modalities are used, they also need to be aligned in time. Most deep learning approaches use only one input modality, mainly audio, because gestures correlate strongly with speech. However, as already mentioned, the model also needs to understand the semantics of the speech to generate semantic gestures. For this reason, some recent approaches propose models that take multiple modalities as input. Today, the most popular feature representations for text are embeddings, e.g., GloVe, FastText, and BERT.
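Extracting and aligning features can be sketched in a few lines. The sketch below is written in pure Python so that it is self-contained. It assumes word timings (start and end in seconds) are already available, for example from a forced aligner. It uses per-frame RMS energy as a simple stand-in for richer audio features such as spectrograms. The function names, the toy embedding table (standing in for pretrained GloVe or FastText vectors), and the frame rates are all hypothetical. The sketch resamples both modalities to the motion frame rate and concatenates them per frame.

```python
import math
from typing import Dict, List, Sequence, Tuple

def frame_rms(samples: Sequence[float], sample_rate: int, fps: int) -> List[float]:
    """RMS energy of the audio samples that fall into each motion frame."""
    hop = sample_rate // fps  # audio samples per motion frame
    n_frames = len(samples) // hop  # a trailing partial frame is dropped
    energies = []
    for i in range(n_frames):
        chunk = samples[i * hop:(i + 1) * hop]
        energies.append(math.sqrt(sum(x * x for x in chunk) / hop))
    return energies

def align_words(
    words: List[Tuple[str, float, float]],
    embeddings: Dict[str, List[float]],
    n_frames: int,
    fps: int,
    dim: int,
) -> List[List[float]]:
    """Copy each word's embedding onto every frame its [start, end) span covers.

    Frames without speech, and words missing from the table, get zero vectors.
    """
    feats = [[0.0] * dim for _ in range(n_frames)]
    for word, start, end in words:
        vec = embeddings.get(word, [0.0] * dim)
        first = int(start * fps)
        last = min(math.ceil(end * fps), n_frames)
        for f in range(first, last):
            feats[f] = list(vec)
    return feats

def multimodal_features(samples, sample_rate, fps, words, embeddings, dim):
    """Concatenate per-frame audio energy with the time-aligned text embedding."""
    energies = frame_rms(samples, sample_rate, fps)
    text = align_words(words, embeddings, len(energies), fps, dim)
    return [[e] + t for e, t in zip(energies, text)]

# Toy usage: 8 Hz "audio", 2 motion frames per second, 2-D word vectors.
samples = [0, 0, 0, 0, 1, -1, 1, -1, 2, -2, 2, -2]
words = [("hello", 0.5, 1.0), ("world", 1.0, 1.5)]
embeddings = {"hello": [0.1, 0.2], "world": [0.3, 0.4]}
features = multimodal_features(samples, 8, 2, words, embeddings, dim=2)
for frame in features:
    print(frame)
# [0.0, 0.0, 0.0]
# [1.0, 0.1, 0.2]
# [2.0, 0.3, 0.4]
```

Repeating a word's vector over its whole duration is only one possible alignment choice. Contextual models such as BERT produce subword vectors, which would first need to be pooled per word before this step.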

Pose Representation

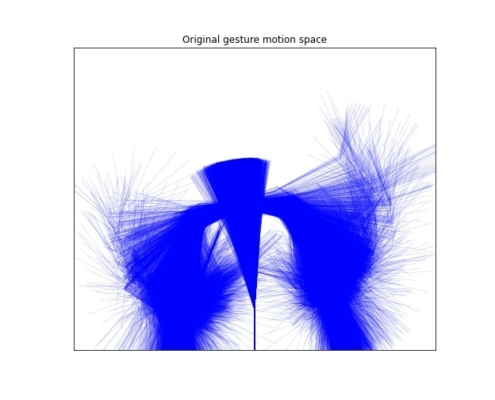

The most basic approach is to represent poses as raw 2D or 3D joint coordinates, and this is what most current works use. However, pose representation is an interesting area of research in its own right, and other representations are worth trying as well.
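One alternative to raw coordinates splits a 3D pose into unit bone directions (parent to child) and bone lengths. The sketch below shows this conversion over a toy skeleton. The skeleton, joint names, and functions are hypothetical and chosen only for illustration. Directions do not depend on bone lengths, so speakers with different body sizes in the same posture map to the same directions. The lengths and the root position are stored separately, which makes the conversion reversible.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical toy skeleton: joint -> parent, listed parents-first (root has None).
PARENTS: Dict[str, Optional[str]] = {
    "hips": None,
    "spine": "hips",
    "neck": "spine",
    "r_shoulder": "neck",
    "r_elbow": "r_shoulder",
    "r_wrist": "r_elbow",
}

def to_bones(pose: Dict[str, Vec3]) -> Tuple[Dict[str, Vec3], Dict[str, float]]:
    """Split absolute joint positions into unit bone directions and bone lengths."""
    directions: Dict[str, Vec3] = {}
    lengths: Dict[str, float] = {}
    for joint, parent in PARENTS.items():
        if parent is None:
            continue  # the root has no incoming bone
        px, py, pz = pose[parent]
        jx, jy, jz = pose[joint]
        dx, dy, dz = jx - px, jy - py, jz - pz
        length = math.sqrt(dx * dx + dy * dy + dz * dz)
        directions[joint] = (dx / length, dy / length, dz / length)
        lengths[joint] = length
    return directions, lengths

def from_bones(directions, lengths, root_pos: Vec3) -> Dict[str, Vec3]:
    """Rebuild absolute joint positions by walking the skeleton from the root."""
    pose: Dict[str, Vec3] = {}
    for joint, parent in PARENTS.items():
        if parent is None:
            pose[joint] = root_pos
            continue
        px, py, pz = pose[parent]
        ux, uy, uz = directions[joint]
        s = lengths[joint]
        pose[joint] = (px + s * ux, py + s * uy, pz + s * uz)
    return pose

# Toy pose: upright torso, right arm hanging down, forearm pointing forward.
pose = {
    "hips": (0.0, 1.0, 0.0),
    "spine": (0.0, 1.5, 0.0),
    "neck": (0.0, 2.0, 0.0),
    "r_shoulder": (0.25, 2.0, 0.0),
    "r_elbow": (0.25, 1.75, 0.0),
    "r_wrist": (0.25, 1.75, 0.25),
}
directions, lengths = to_bones(pose)
print(directions["r_wrist"], lengths["r_wrist"])  # (0.0, 0.0, 1.0) 0.25

# A speaker twice as large in the same posture: same directions, doubled lengths.
big_pose = {j: (2 * x, 2 * y, 2 * z) for j, (x, y, z) in pose.items()}
big_directions, big_lengths = to_bones(big_pose)
print(big_directions == directions)  # True

# The representation is reversible given the root position and bone lengths.
print(from_bones(directions, lengths, pose["hips"]) == pose)  # True
```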

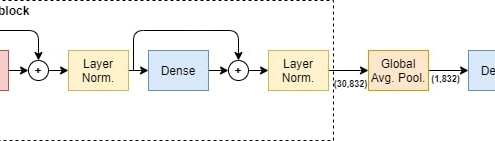

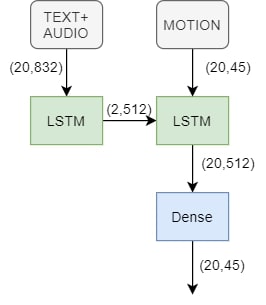

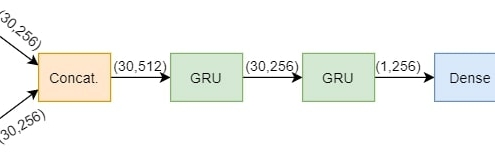

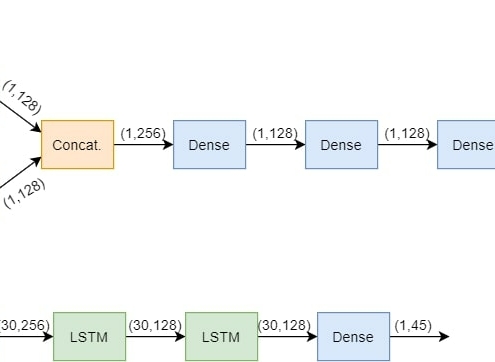

Deep Learning Architectures

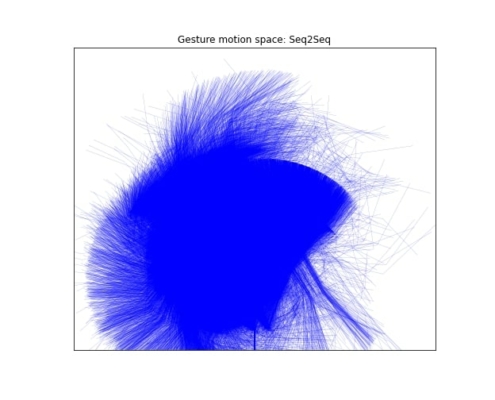

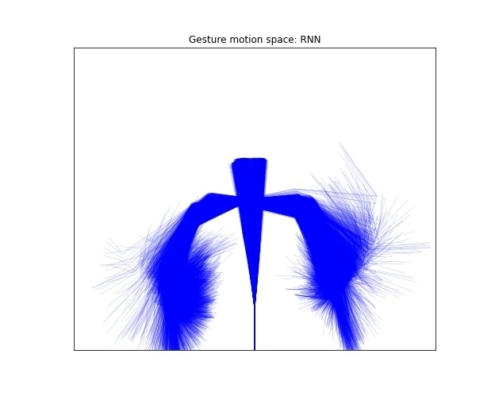

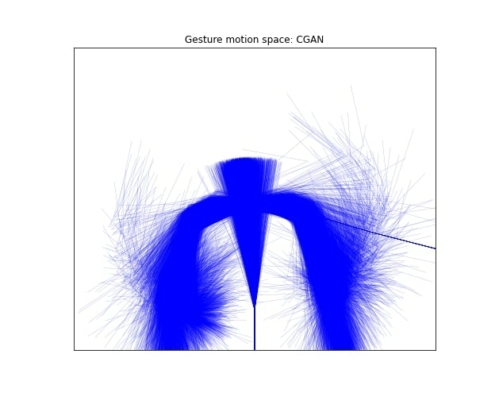

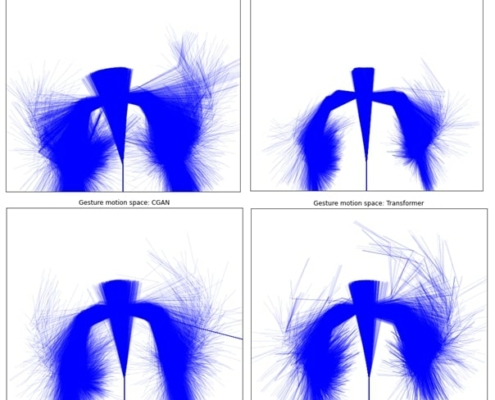

Generation and Evaluation

Evaluating gesture generation results is tricky, because standard regression metrics such as mean squared error (MSE) are not well suited to this task. For example, an agent may perform a gesture with one hand while the ground truth performs it with the other hand. The MSE would then be large, even though a subjective evaluation would judge the two gestures to be equivalent. For this reason, researchers in the gesture generation field rely on subjective evaluation of their models. We did not conduct a formal subjective evaluation here because the models were only baselines. The only subjective evaluation was done by the author of this report while training the models and choosing the architecture and hyperparameters.
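The one-hand versus other-hand problem can be reproduced numerically. The toy example below uses hypothetical 2D wrist trajectories over five frames. In it, MSE penalizes the mirrored gesture twice as much as not gesturing at all. `mirror_invariant_mse` is an illustrative, hypothetical fix for this single failure case only. It does not capture the many other ways in which different gestures can be equally appropriate, so subjective evaluation is still needed.

```python
from typing import List, Tuple

# Each frame holds 2D wrist positions: (left_x, left_y, right_x, right_y).
Frame = Tuple[float, float, float, float]

def mse(pred: List[Frame], truth: List[Frame]) -> float:
    """Mean squared error over every coordinate of every frame."""
    total = 0.0
    count = 0
    for p_frame, t_frame in zip(pred, truth):
        for p, t in zip(p_frame, t_frame):
            total += (p - t) ** 2
            count += 1
    return total / count

def mirror(frames: List[Frame]) -> List[Frame]:
    """Swap the hands and reflect x across the body midline (x = 0)."""
    return [(-rx, ry, -lx, ly) for lx, ly, rx, ry in frames]

def mirror_invariant_mse(pred: List[Frame], truth: List[Frame]) -> float:
    """Hypothetical metric: score the better of the prediction and its mirror image."""
    return min(mse(pred, truth), mse(mirror(pred), truth))

T = 5
heights = [t / (T - 1) for t in range(T)]  # a hand rises from 0 to 1

# Ground truth: the LEFT hand rises while the right hand rests.
truth = [(-0.3, h, 0.3, 0.0) for h in heights]
# Prediction A: the same gesture performed with the RIGHT hand.
mirrored = [(-0.3, 0.0, 0.3, h) for h in heights]
# Prediction B: no gesture at all; both hands stay at rest.
static = [(-0.3, 0.0, 0.3, 0.0) for _ in heights]

print(mse(mirrored, truth))                   # 0.1875
print(mse(static, truth))                     # 0.09375
print(mirror_invariant_mse(mirrored, truth))  # 0.0
```

Plain MSE ranks the motionless prediction above a plausible gesture. This is one reason why models trained and judged only on such losses tend to favor averaged, low-motion output.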